XR Projects

In this section I showcase the three main XR projects I have been working on, including my dissertation project: a mixed reality strategy game that the player can control with either hand tracking or controllers.

My dissertation brief was to make a mixed reality strategy game. I then decided to base my research question on cognitive load and how it affects the user when using controllers versus hand tracking.

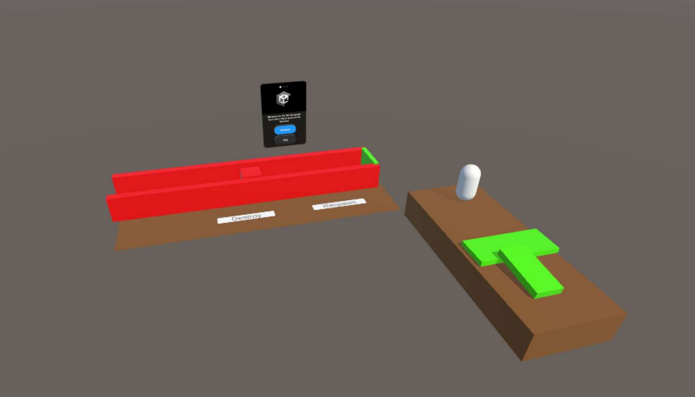

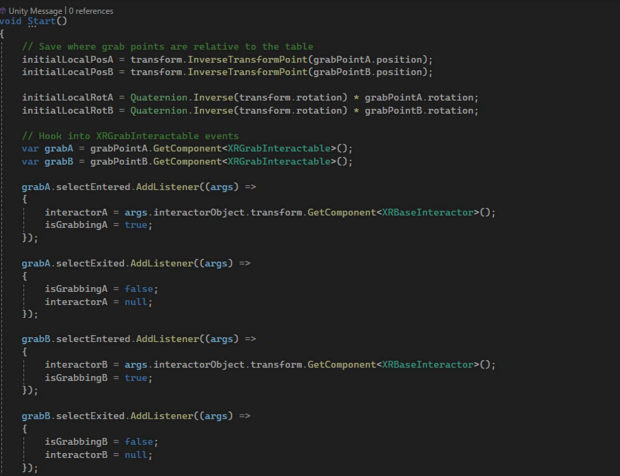

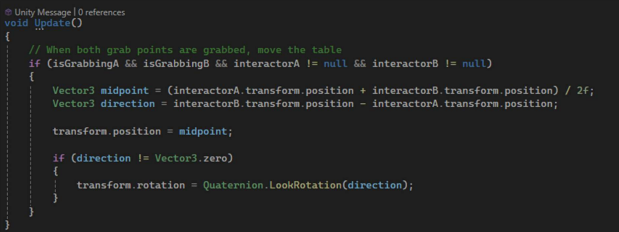

Greenwich University Final Year Project, Andrew Steer, page 16

There are several ways to create this feature, but each has different effects and attributes. The script in Figure 4 is designed to manage the two grab points of the table. It initialises by capturing the local positions and rotations of the two grab points (grabPointA and grabPointB) relative to the main interactor. This information is critical for maintaining the spatial relationship between the grab points and the interactor when the user interacts with the table. The script then retrieves the XRGrabInteractable component associated with each grab point and registers event listeners for interaction events, which I found to be the best way to implement custom events in the XR toolkit. It listens for the selectEntered and selectExited events to detect when each grab point is picked up or released by the interactor. When a grab point is selected, the script stores the interactor reference and sets the corresponding flag (isGrabbingA or isGrabbingB) to true. When the interaction ends, these values are reset. This setup allows the application to track whether one or both grab points are being held, which is essential for two-handed object manipulation and is what this feature needs to function.

This feature had many issues, such as each object being scaled differently and the grab points snapping to a position that was difficult for the player to use. Using the hierarchy and managing the scale of objects is very important, as the parent needs to have a uniform scale for the XR Grab Interactable to work.
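The grab tracking described above can be modelled outside Unity. The sketch below is a plain Python model of the idea, not the project's actual script: `Event`, `GrabPoint` and `TwoHandGrabTracker` are hypothetical stand-ins for the toolkit's interactable events and the script's flags, and interactors are represented as simple strings.

```python
from typing import Callable, List, Optional


class Event:
    """Minimal stand-in for an event that listeners can subscribe to."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[str], None]] = []

    def add_listener(self, listener: Callable[[str], None]) -> None:
        self._listeners.append(listener)

    def invoke(self, interactor: str) -> None:
        # Notify every registered listener, passing the interactor involved.
        for listener in list(self._listeners):
            listener(interactor)


class GrabPoint:
    """Hypothetical grab point exposing select-entered / select-exited events."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.select_entered = Event()
        self.select_exited = Event()


class TwoHandGrabTracker:
    """Tracks which of two grab points is held, and by which interactor."""

    def __init__(self, grab_point_a: GrabPoint, grab_point_b: GrabPoint) -> None:
        self.is_grabbing_a = False
        self.is_grabbing_b = False
        self.interactor_a: Optional[str] = None
        self.interactor_b: Optional[str] = None

        # Register listeners once, at initialisation.
        grab_point_a.select_entered.add_listener(self._on_grab_a)
        grab_point_a.select_exited.add_listener(self._on_release_a)
        grab_point_b.select_entered.add_listener(self._on_grab_b)
        grab_point_b.select_exited.add_listener(self._on_release_b)

    def _on_grab_a(self, interactor: str) -> None:
        self.interactor_a = interactor
        self.is_grabbing_a = True

    def _on_release_a(self, interactor: str) -> None:
        # Reset the stored reference and flag when the interaction ends.
        self.interactor_a = None
        self.is_grabbing_a = False

    def _on_grab_b(self, interactor: str) -> None:
        self.interactor_b = interactor
        self.is_grabbing_b = True

    def _on_release_b(self, interactor: str) -> None:
        self.interactor_b = None
        self.is_grabbing_b = False

    @property
    def both_held(self) -> bool:
        """True only while both grab points are held (two-handed mode)."""
        return self.is_grabbing_a and self.is_grabbing_b


if __name__ == "__main__":
    point_a, point_b = GrabPoint("A"), GrabPoint("B")
    tracker = TwoHandGrabTracker(point_a, point_b)
    point_a.select_entered.invoke("left hand")
    point_b.select_entered.invoke("right hand")
    print("two-handed:", tracker.both_held)
```

Keeping one flag and one interactor reference per grab point means the two-handed state is derived rather than stored, so it cannot drift out of sync when either hand lets go.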

This project is a virtual reality colouring game that I made in a team of five students. My role was programming and making sure the core components of the XR toolkit would work with what we were creating. This was tricky, as we found the XR toolkit is not well documented when it comes to creating your own code and assets. There were also some difficulties getting the UI to function. As seen above, on each side of the palette there are two arrows that allow the user to select different colours, and getting the XR controllers to interact precisely with the UI was difficult.

My Dissertation

In-depth breakdown of some of the components of this project

This image highlights several modifications made to the game, including the removal of visual ladders and trampolines. These elements posed significant technical challenges, particularly in ensuring the character controller could accurately identify the target while navigating vertical objects such as ladders. As a result, a simpler task was devised for playtesting, one that retained the strategic elements of gameplay and accommodated the evaluation of both hand tracking and controller interaction methods. This adjustment was essential to streamline the testing process while preserving the core objectives of the research.